Building The India Portfolio #5 – Optimizing Your LLM Usage

Optimizing Your LLM Usage: Beyond Prompts

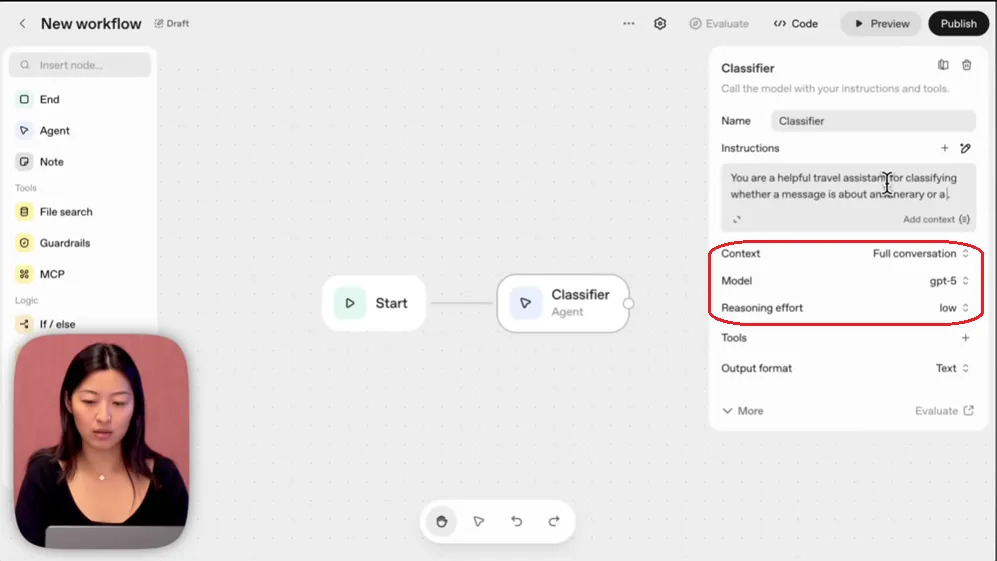

See, for instance, this excellent demo of Agent Builder by OpenAI. It shows how easy it is to create agentic workflows, using the example of a travel assistant with two workflow branches:

- build an itinerary

- look up flight information

Source: Link

When building your own agentic workflows, the important thing to keep in mind is that you don’t have to make calls to the LLMs at every step. The demo video does so to show off the capabilities of the tool; don’t draw the wrong lesson from demos like this.

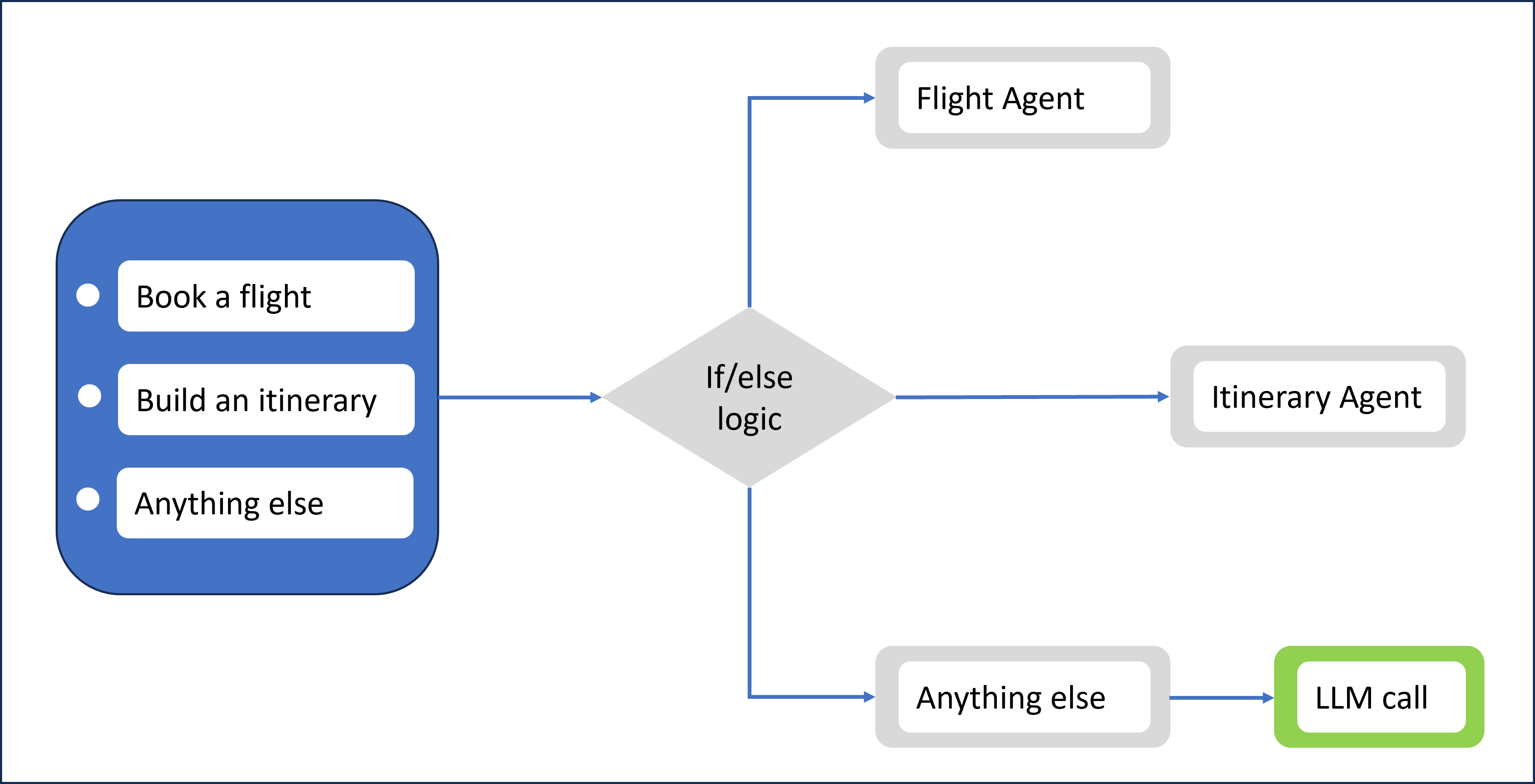

Your design choices should lean towards simplicity and efficiency, i.e. using code over LLMs as much as possible. A classifier that directs the user query towards a flight agent or an itinerary agent does not really need a model call. The old approach works just fine: give the user two options to choose from (book a flight or build an itinerary), then feed the user’s choice into an if/else statement to direct the workflow. If you must, add a third option that calls the LLM API for any other input.
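As a rough illustration of that if/else routing, here is a minimal Python sketch. All the names in it (`route`, `build_itinerary`, `look_up_flight`, `call_llm`) are hypothetical placeholders rather than part of Agent Builder or any real SDK, and `call_llm` is a stub standing in for whatever LLM API you actually use.

```python
from typing import Callable


def build_itinerary(query: str) -> str:
    # Hypothetical stand-in for the itinerary branch of the workflow.
    return f"[itinerary agent] {query}"


def look_up_flight(query: str) -> str:
    # Hypothetical stand-in for the flight branch of the workflow.
    return f"[flight agent] {query}"


def call_llm(query: str) -> str:
    # Stub: in a real workflow this is where the LLM API call would go.
    return f"[LLM fallback] {query}"


def route(choice: str, query: str, llm: Callable[[str], str] = call_llm) -> str:
    """Direct the workflow from the user's menu choice using plain code.

    The LLM is only reached through the third, catch-all option.
    """
    normalized = choice.strip().lower()
    if normalized == "flight":
        return look_up_flight(query)
    elif normalized == "itinerary":
        return build_itinerary(query)
    else:
        return llm(query)


if __name__ == "__main__":
    print(route("flight", "DEL to BOM on Friday"))
    print(route("itinerary", "3 days in Jaipur"))
    print(route("other", "Do I need a visa for Bhutan?"))
```

In this sketch the first two choices never touch the model, so the only requests that incur inference time and token costs are the ones that fall through to the catch-all branch.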

That way you make calls to the LLM API only when necessary, which saves on inference time and token costs and also reduces errors. Remember, LLMs can hallucinate; code doesn’t. Errors in code can be traced and fixed, while LLMs can be a black box. Not to mention the extensive additional testing that agentic workflows might need if a different model has to be used.

In the previous post in this Building The India Portfolio series, we introduced our Prompt Optimizer tool. It helps you trim task-heavy prompts so that they retain the qualitative, reasoning, and unstructured search-and-locate tasks that actually need LLMs, and exclude the quantitative or deterministic tasks that can be handled using code and structured search.

Building The India Portfolio #2 – Now Search Funds & Companies by Sectors, Get Detailed Info Pages on Companies

Building The India Portfolio #4 – Optimizing Your LLM Prompts: A Guide to our Prompt Optimizer Tool


That design philosophy should be carried forward to all parts of agentic workflow design. It is convenient to offload tasks and workflows to the LLMs; we get that. We have been there while building The India Portfolio. But the old way of writing code is not to be abandoned. The future of agentic workflows is a mix of code and calls to frontline LLMs, because costs and accuracy matter in production.

In another post we will also analyze the future of small language models.

But first, we need to get back to -