Attention Is All You Need (Except When You Need Comprehensiveness)

Let’s put gemini-2.5-pro to the test on one simple task: providing a comprehensive list of “things of a kind”. gemini-2.5-pro is arguably one of the best LLMs at understanding user queries and at weaving data from live web searches into good-quality output.

Besides, most frontier LLM providers have focused on launching deep research tools, which can source and analyze large datasets and deliver crisp summaries at a speed and scale beyond human capability. The results are impressive enough that it can be tempting to plug these deep research outputs straight into your work or decision making.

But halt! As the simple experiment below shows (and you will most likely be able to replicate its results yourself), LLMs, even with their current processing horsepower and access to multiple relevant data sources, get tripped up easily.

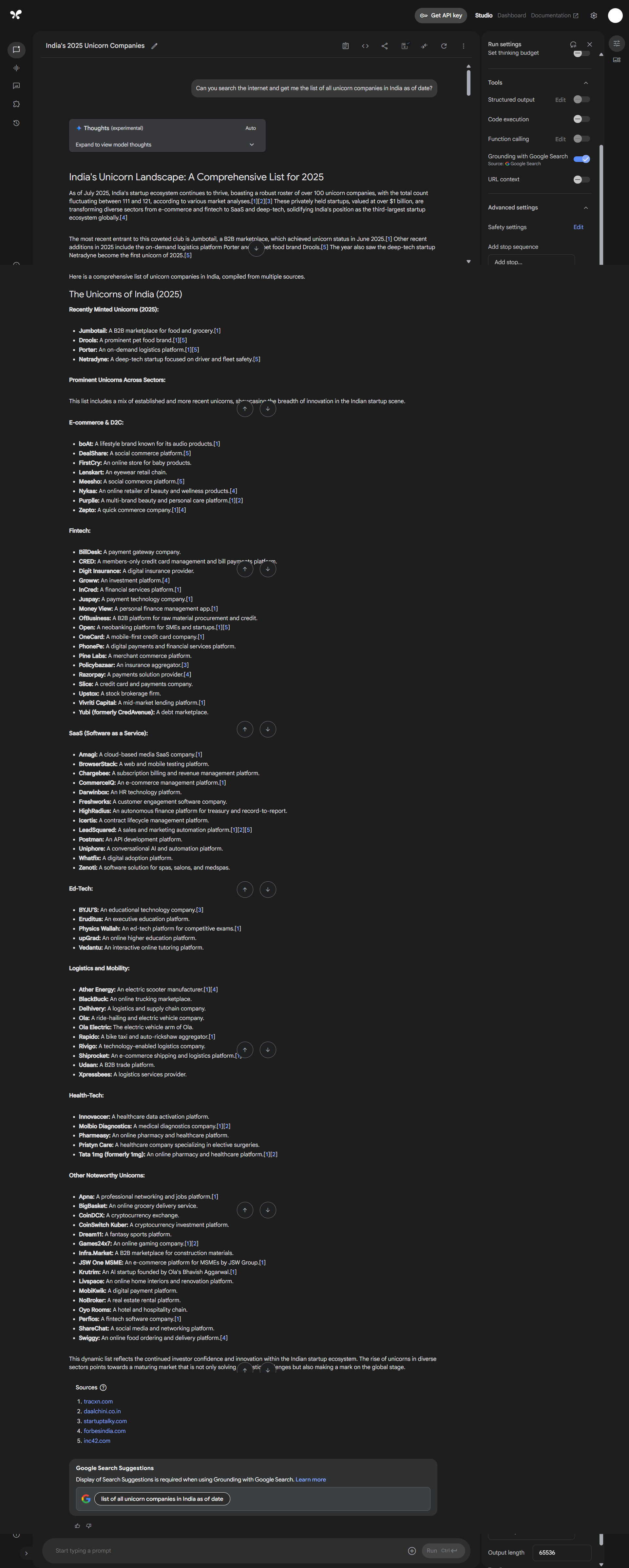

In Google AI Studio, with “Grounding with Google Search” switched on, I asked gemini-2.5-pro: “Can you search the internet and get me the list of all unicorn companies in India as of date?” This is what it returned:

It returned 82 names, including current and former unicorns, and cited its sources. Those sources put the unicorn count somewhere between 111 and 121 (which gemini did note right at the beginning of its answer!). And yet the list it returned falls well short of that count, even though gemini used the right sources, which already had the data in a ready-to-use format.
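To put a number on “falls short”, you can measure the LLM’s list against one source list. Below is a minimal Python sketch, assuming both lists have already been copied out as plain strings. The helper names (`normalize`, `coverage`) and the sample company names are hypothetical placeholders, not the actual lists from this experiment, and the name matching is deliberately crude (lowercasing and whitespace only, no aliases).

```python
def normalize(name: str) -> str:
    # Lowercase and collapse whitespace so "Acme  Pay" and "acme pay" match.
    # Real company names also need alias handling, which this sketch skips.
    return " ".join(name.lower().split())


def coverage(llm_names: list[str], source_names: list[str]) -> tuple[float, list[str]]:
    """Return the share of source names found in the LLM answer, plus the misses."""
    llm = {normalize(n) for n in llm_names}
    source = {normalize(n) for n in source_names}
    if not source:
        return 0.0, []
    missing = sorted(source - llm)
    return len(source & llm) / len(source), missing


# Hypothetical placeholder names, not the unicorn lists from the experiment.
source_list = ["Acme Pay", "Beta Foods", "Gamma Learn", "Delta Cloud"]
llm_answer = ["acme pay", "Gamma  Learn"]

ratio, missing = coverage(llm_answer, source_list)
print(f"coverage: {ratio:.0%}")  # coverage: 50%
print("missing:", missing)       # missing: ['beta foods', 'delta cloud']
```

Applied to the numbers above, 82 returned names against a 117-name source list can give at most 82/117, or about 70% coverage, and less if some of the 82 are former unicorns absent from that source.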

And mind you, this is one of the simpler “find all things of a kind” tasks, since the answer is literally available as a list in several of the underlying sources. Sure, each source may have a marginally different list, and I would have accepted the response if it had returned any one of these lists, or their intersection, as its comprehensive answer. But no: when you let the LLM choose what data to look at, things can go wrong even when the right data is available.
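The fallback answer described here, any single source’s list or the intersection of several, is easy to compute yourself once the lists are in hand. A minimal Python sketch, assuming each source’s list has already been extracted as plain strings; `consensus` and the placeholder company names are hypothetical, and names are matched only after lowercasing and collapsing whitespace.

```python
def normalize(name: str) -> str:
    # Crude matching: lowercase and collapse whitespace; aliases are not handled.
    return " ".join(name.lower().split())


def consensus(*source_lists: list[str]) -> tuple[set[str], set[str]]:
    """Return (names in every source, names in at least one source)."""
    sets = [{normalize(n) for n in lst} for lst in source_lists]
    if not sets:
        return set(), set()
    return set.intersection(*sets), set.union(*sets)


# Hypothetical placeholder lists standing in for three web sources.
source_a = ["Acme Pay", "Beta Foods", "Gamma Learn"]
source_b = ["acme pay", "Gamma Learn", "Delta Cloud"]
source_c = ["Acme Pay", "Gamma Learn", "Beta Foods"]

in_all, in_any = consensus(source_a, source_b, source_c)
print(sorted(in_all))  # ['acme pay', 'gamma learn']
print(len(in_any))     # 4
```

The intersection is the conservative answer and the union the most inclusive one; the gap between their sizes shows how much the sources disagree.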

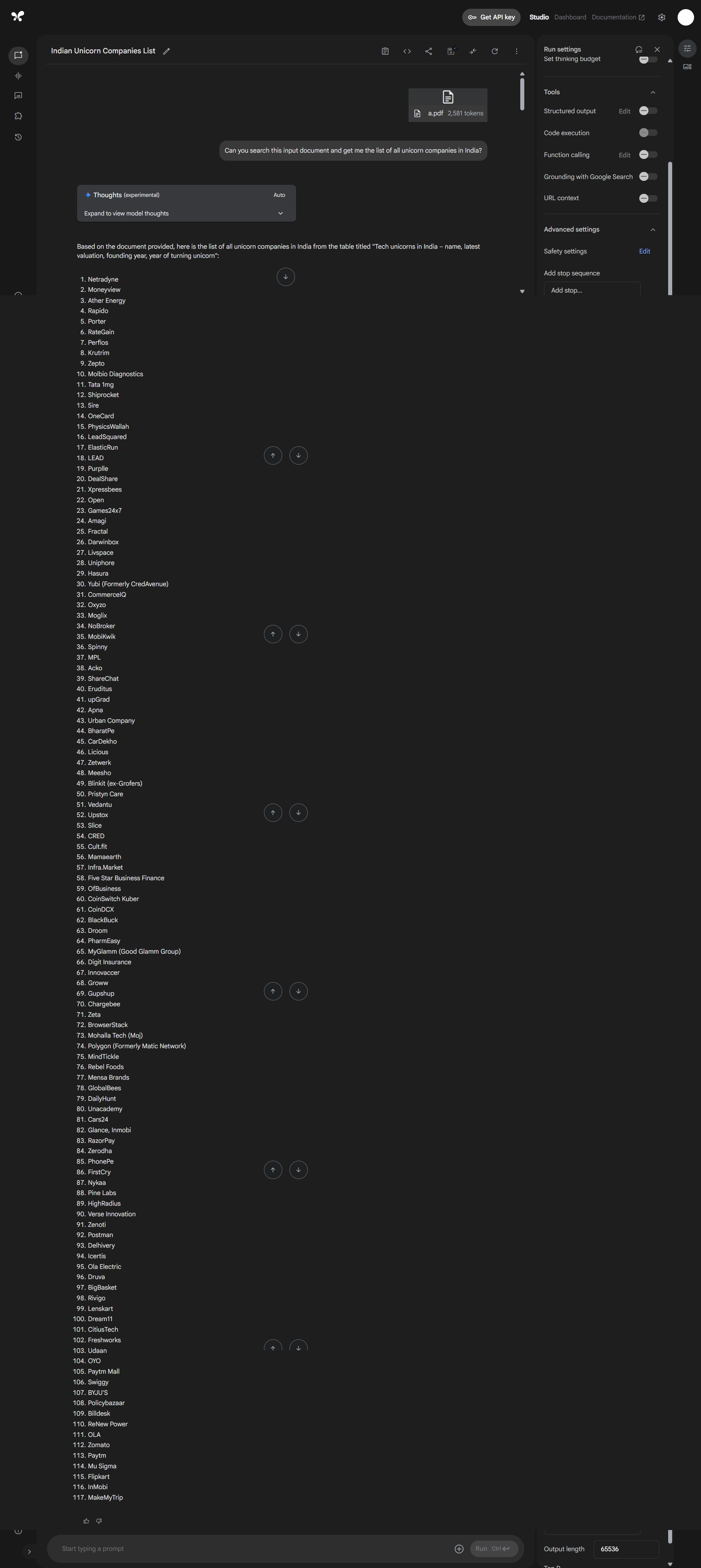

Contrast this with the response below, where “Grounding with Google Search” is switched off and the underlying data is supplied by the user.

Below is the exact PDF I provided to gemini-2.5-pro. It contains the list of 117 unicorns from https://startuptalky.com/top-unicorn-startups-india/ (the third source cited in the response above). I wrapped unrelated text around the list (taken from a B2B marketplaces report by Elevation Capital) to check whether that could throw the LLM off.

User Supplied Data Input to Gemini

But this time around, gemini did exactly what it was asked to do. Just look at the improvement in the answer below, not just in quality (it returned all 117 unicorns present in the input) but in format too: no fluff, summaries or subcategories, just what was asked for. And mind you, the input presents the unicorn list as a table with many other columns.

Now this is a response you can readily use in your work. And you got there by switching off a powerful capability of the LLM and taking more control over how it operated than in the first example.

You can try this experiment with other LLMs as well, and I suspect you will see similar results. ChatGPT’s and gemini’s deep research tools (gemini’s deep research is a different interface from gemini in Google AI Studio) tripped up even worse and produced even shorter lists of names, despite trawling more data sources. I haven’t tried this experiment with the gemini API, because web searches there are not free, unlike in Google AI Studio. If you’ve got coin to burn on this pursuit, go ahead.

In summary, LLMs are great at comprehending what users want, and have been for a while now, but letting them figure out what data to use as inputs (and possibly how to use those inputs) is still a bridge too far. So whenever you need a (near) comprehensive list of “things of a kind” and the list isn’t short (more than 20 items), don’t let your LLMs loose; preferably, give them the data they need.